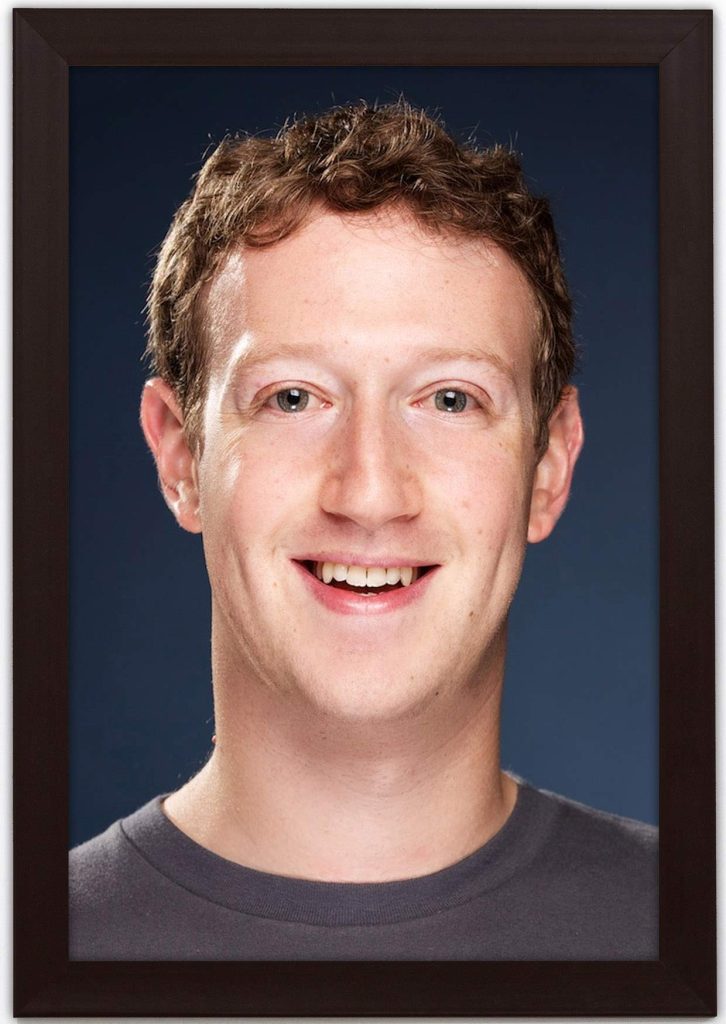

In 2026, Meta CEO Mark Zuckerberg found himself embroiled in a serious AI safety case that raised significant ethical questions about the technology his company developed. As artificial intelligence systems became increasingly integrated into everyday life, concerns grew over their safety, transparency, and potential for misuse. A particular incident involving a malfunctioning AI application intensified scrutiny, sparking investigations into Meta’s practices.

Critics argued that the company prioritized profit over safety and neglected to implement adequate safeguards. The case prompted discussions about regulatory frameworks and best practices for AI development. Zuckerberg faced mounting pressure from lawmakers and advocates who demanded accountability and stronger oversight of the tech industry.

In response, Meta initiated a series of reforms aimed at improving AI protocols, addressing public fears, and restoring trust. The controversy also ignited broader conversations about the ethical implications of AI, emphasizing the need for collaboration between tech giants, regulators, and civil society to ensure safe innovation.

For more details and the full reference, visit the source link below: